Microsoft Copilot

The School has Microsoft Copilot enabled for all staff and students, and it is the recommended generative AI tool for all LSE members because of its in-built security and data privacy: none of your inputs are ever stored, which makes it GDPR and copyright friendly. To use it, open the Edge browser and log in with your LSE email; a label saying ‘protected’ will confirm you’re using the secure, work-appropriate interface.

Claude for Education

In 2025, LSE partnered with Anthropic to enable access to Claude for Education for all students and teaching staff. The scope of this licence is education rather than research, but teachers can learn a lot about potential research value by experimenting with projects and artifacts. More info here: https://info.lse.ac.uk/staff/divisions/Eden-Centre/Artificial-Intelligence-Education-and-Assessment/Anthropics-Claude-for-Education

ChatGPT Team / Business Plans

For anything work related (or that you wouldn't want to share) where MS Copilot isn’t viable, it’s worth considering the ChatGPT Team plan, which offers enhanced security and privacy and requires only two or more users per team. The Team plan (now rebranded as 'Business') includes all features of ChatGPT Plus, with the addition of a limited number of messages using GPT-5 Pro. All data in the Team plan can be deleted entirely after 30 days, and the data is not used to train OpenAI's models. However, because OpenAI still stores the data for 30 days in the US, no personally identifiable information should ever be shared without a Data Protection Impact Assessment (DPIA) having been approved first.

LSE DTS has permitted units to purchase the ChatGPT Team plan since 2024, as long as the usage will never entail sharing personal data. The following is the guidance DTS provides before purchase:

“Each AI provider has its own terms of service, security model, and end user agreements. It’s important to read through these so you understand what any AI service will do with your data, where your data will be stored, and if it can potentially be reused or regurgitated by other users of the service. You need to read these terms of service carefully before proceeding to use an AI service – if you need further help, please talk to the cyber security team via dts.cyber.security.and.risk@lse.ac.uk

In particular, please be aware:

It is highly inadvisable to prompt an AI service with any confidential or personal information.

It is unwise to trust the results as correct, without performing separate verification.

AI is prone to ‘hallucinations’ and may make up data it does not have, or otherwise refer to information inconsistently or incorrectly.

Data may be stored in the US or jurisdictions that do not meet the required standards of UK GDPR, so if any research data is input into it check that doing so wouldn't breach any research data agreement.

Remember that with most AI services there aren't any guarantees of service.”

Gemini

Google's Gemini models and associated applications have steadily become more competitive after a sluggish start in 2023, with Gemini 2.5 Pro now top-tier in 2025. Their NotebookLM platform (especially the AI podcast generation tool) went viral in late 2024, followed by reasoning models (Google calls them 'thinking' models) similar to OpenAI's o series, which as of 2025 are still some way off the top but are much cheaper to use. Google released Deep Research in 2024, which enables fast and broad online source searching and was best in class until OpenAI released their own 'Deep Research' using o3 in 2025, which is more thorough and capable in how it analyses and synthesises the sources it can access. Google also makes some experimental features available for free in Google AI Studio, including the real-time streaming tool where a user can chat to a voice LLM while sharing their screen.

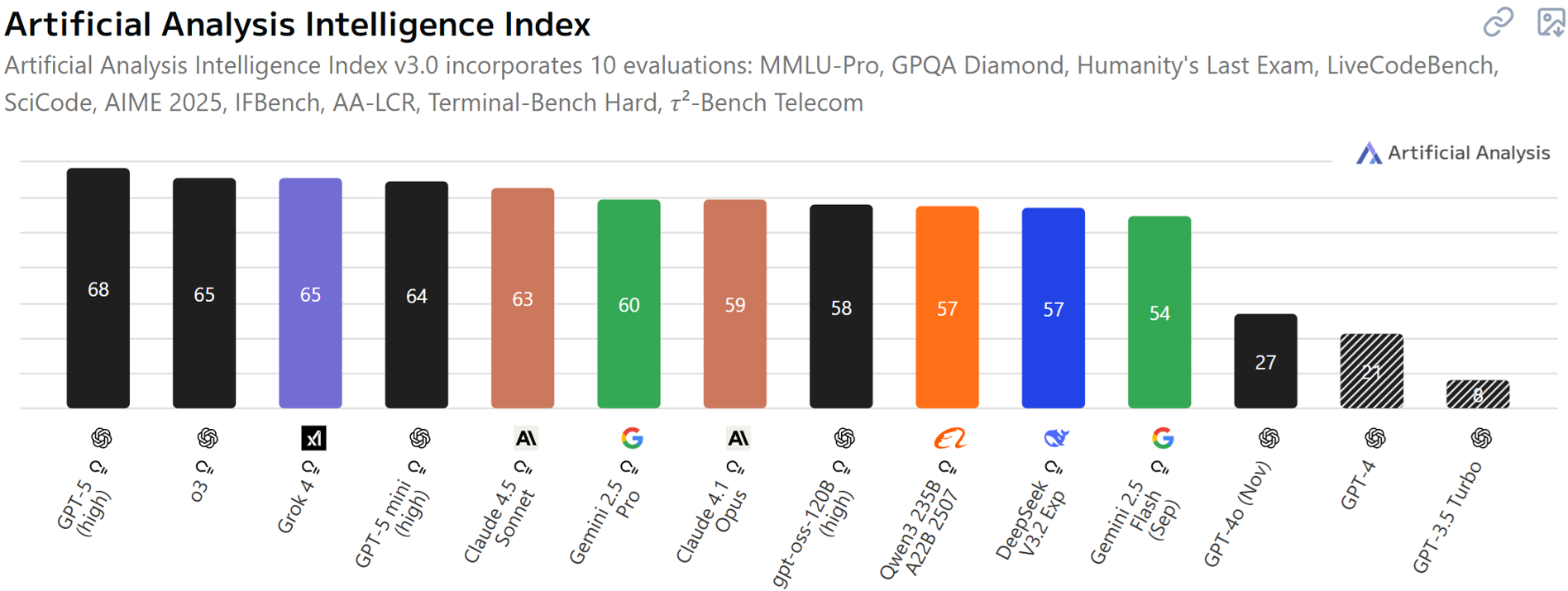

Model Rankings

Below is the latest leaderboard from Artificial Analysis as of Nov 2025.

AI tools dedicated to literature-related research

There are some long-standing literature mapping tools, such as Research Rabbit (free), SciSpace (free + paid), Semantic Scholar (free) and Connected Papers (free + paid), that don’t use LLMs to enhance searches (they rely on limited semantic NLP instead) but are nonetheless very useful for building up literature collections.

The most advanced literature review platform that has generative AI integrated as a core feature with a 'made by researchers for researchers' objective is Undermind, with Elicit and Scite AI being strong contenders (see the Literature Review page for more info).