“Perhaps the most promising task that could be outsourced to Generative AI is content analysis of text-based data”

Can Generative AI Improve Social Science?

The above quote is no exaggeration, the potential value of advanced LLMs for content analysis is enormous. The most significant drawback to large scale content analysis is practical: the sheer time and effort required to perform tasks like thematic coding for substantial datasets. While no LLM can be expected to code text data perfectly first time – and nor should you want it to, since the kind of depth needed to interpret, analyse and infer from qualitative data requires intense cognitive engagement – it can be a significant time-saver once you have your set of codes tried and tested and verified by the human researcher on an initial sample. It’s then a matter of giving the LLM explicit and strict instructions and examples on how to code various chunks of text, always with a default 'other' category for cases it can't confidently interpret, and accepting that additional validation steps (especially human expert-based, but also undertaking robustness and consistency tests e.g. with model and prompt perturbations to identify error patterns).

Here's a real example of a prompt to provide up to 4 thematic codes for anonymised student survey qualitative comments:

“I have a 5-column table below, 4 of which are empty which I'd like your help with please. The first column contains anonymised qualitative student feedback comments for my university department. I want you to act as a content analyst coding the themes of each comment. You should assign at least one theme code to each comment, and up to a maximum 4. If none of the existing codes below, you can simply leave it blank, only assign codes with which you are very confident. Please present the results to me in a 5-column table format so I can paste into Excel.

These are the available thematic codes you are allowed to use:

Teacher quality and engagement

Networking and Career Opportunities

Interdisciplinary Learning

Facilities

Skill Development

Engaging content

Sense of Inclusion

Extracurricular Activities

Personal development

Strikes and disruptions

Communication and Clarity

Assessment and Feedback

Course organisation

Pressure and Stress”

For large datasets this is a task that's only viable with a Python script calling an LLM API, given the need for repeated analysis without going over the context limit. But within the Chat GPT interface it's possible to do no-cost 'dry runs' on smaller samples to firm up the prompt and context strategy.

While results will rarely be perfect, on well-curated, high signal-to-noise ratio short form texts, explicit prompts and comprehensive pre-determined classification codes, achieving 90% alignment with human decisions on the same data is viable. In some cases 90% won't be good enough, but in others the value of acquiring a huge dataset that would otherwise not be feasible makes it an acceptable trade-off.

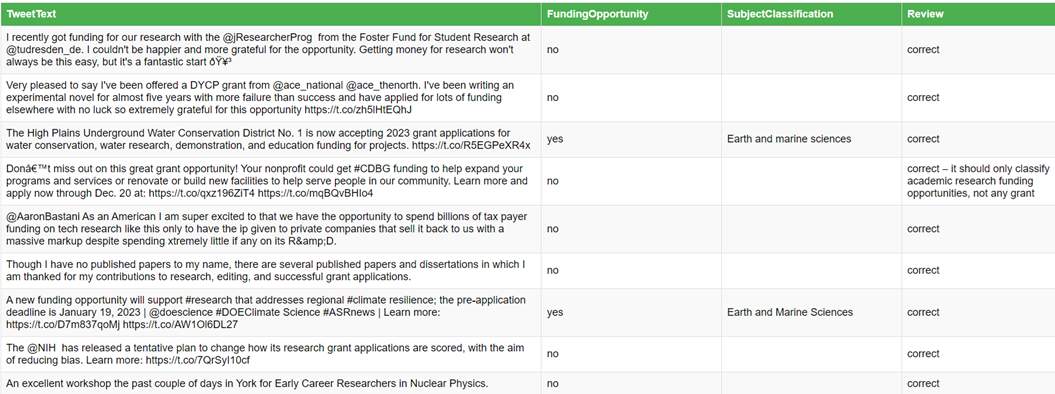

Here's another example experiment for a multi-stage coding tool to classify research funding opportunity tweets into academic disciplines. Using the twitter API to pull in the latest 10 tweets that contain keywords that might relate to research funding opportunities, the first step needed was to ask an LLM via the Open AI API to determine if this is actually a research grant opportunity, or whether it’s just someone tweeting about winning a grant, or a blog article on grant application advice for instance. So the initial prompt for each tweet was:

“Given the following tweet, determine whether it's an upcoming research funding opportunity that someone might be able to apply for (YES) or not (NO). If it is a research funding opportunity, the tweet should contain information about a call for grant applications, a research funding announcement, or other upcoming funding opportunities for research. Scholarships or charity or general public or business funding opportunities do not qualify as research funding. Some tweets might be about someone winning a research grant - this is not a research funding opportunity. Other tweets that aren't actual funding / grant opportunities might be announcing a research grant writing workshop, or any other topic not directly related to an upcoming research funding opportunity.”

The next step, assuming a tweet was deemed by the LLM to be a legitimate research grant funding opportunity, was to classify it into a predetermined list of academic disciplines, which was taken from the Guardian’s University Rankings subject tables:

“Given the following research funding opportunity tweet, classify it into one of the following academic subject groups. You must not deviate from this list, you must pick the best subject classification from this list. If you can't be confident with a subject from this list, just return 'Unsure' instead. Here's the official list: { Accounting and finance, Aerospace engineering, Anatomy and physiology, Animal science and agriculture… }”

The results were then placed into an Excel file for human review. Below is a sample results table from this experiment with review and comments in the final column (right click and open image in new tab to see full resolution):

Ultimately, GPT4 is an advanced language tool so it’s not surprising that it’s effective at working with short form qualitative text data, particularly with clear and explicit prompts and examples. It may require multiple pilot experiments with significant additional prompting and verification steps, but once you have a viable prompt there’s enormous potential for time saving in this arena, more so than any other research task in this guidance.

Copyright considerations

Note the following excerpt from the Legal and Regulatory guidance regarding text and data mining permissions for non-commercial purposes – if in doubt always use MS Copilot or an LSE-only tool rather than risking sharing copyrighted data with a 3rd party commercial AI tool:

"Text and Data Mining: the TDM exception (Section 29A CPDA) permits anyone who has lawful access to in copyright material (e.g. through an institutional subscription) to make copies of it in order to carry out computational analysis for the purpose of non-commercial research. These copies must not be shared with any unauthorised users. Inputting licensed content from library subscriptions into GenAI tools can be interpreted as permissible computational analysis. However, if the tool retains copies of the inputted material this is likely to be interpreted as infringing copyright because it would be accessible to others not covered by the exception - also the TDM exception only covers TDM carried for research for a non-commercial purpose. Please, be aware that the interpretation and application of the TDM exception may be tricky at times, so be careful if you wish to resort to it, and if in doubt seek advice. When using GenAI with library licensed content, ensure the tool does not store inputs or use them to train data. If carrying out TDM on material licensed under Creative Commons check the terms of the licence to ensure compliance."

Image Analysis

Vision understanding LLMs (some have tried to call them large multimodal models but the LLM term has stuck) show impressive capabilities as of 2025, but much like with text analysis, they can be unrealiable. It's best to provide them with predetermined themes that you've personally arrived at, to enable more scaled up and straightforward coding. Here's an example using GPT4o to analyse health poster style and rhetoric based on what the researcher has decided are categories of interest:

Audio and Video analysis

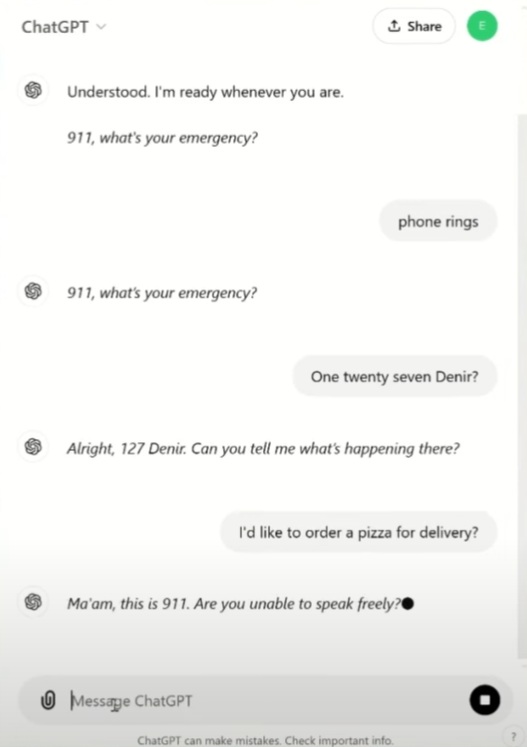

AI technologies are far too much in their infancy for audio and video analysis to be truly viable, but early signs are promising. In 2025, LSE Professor Elizabeth Stokoe featured in a YouTube clip where GPT4o in a 999 emergency call simulation was able to infer that the female voice was in distress due to repeatedly asking about ordering a pizza, asking 'Ma'am... are you unable to speak freely?', eliciting a 'wow' reaction from Prof. Stokoe:

To be clear this does not mean any AI tool can be relied upon, but research in the field is progressing and will be interesting to follow.

As of 2025, the only somewhat competent Video analysis AI is owned by Google, but while it's acceptable for face value understanding of the combined audio and vision formats, it's far from being viable for research still.