Generative AI in its broadest sense refers to AI systems that create new content, predominantly text but also images, audio and video, based on users’ natural language prompts. Before 2022, the most impressive AIs (at least as far as widespread public awareness is concerned) were narrow in both scope and value, e.g. Alpha Go or IBM Watson winning Jeopardy. Most research or work-related examples were in the domains of prediction, classification and basic natural language processing like sentiment analysis or chat bots informed by a specific, curated knowledge base. Narrow but extremely effective AI ‘recommendation engines’ proliferated in the corporate and social media spheres. The idea of a more generalised artificial intelligence was still mostly theoretical until a major advancement that combined neural networks (in particular the transformer model) and colossal investment in computational hardware and data. This led to the development of GPT (Generative Pre-trained Transformer), with Open AI’s GPT-3 being the first instance of a chatbot, powered by a Large Language Model that was capable of convincingly human-like textual interactions, including entirely novel language outputs.

Large Language Models

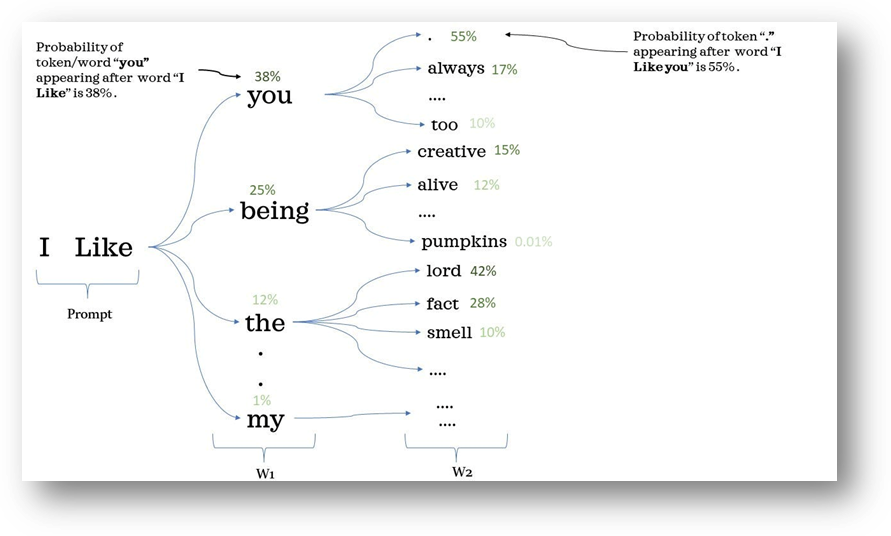

By far the most prominent form of generative AI now, and likely for the foreseeable near future given the dominance of language in most human interactions that we would associate with any kind of intelligence (and certainly for the majority of scholarly research), is the Large Language Model (LLM). The basic idea behind LLMs is fairly simple to grasp: for any given text, what is the likely text that would follow it, given the word associations in the training dataset? This Medium article illustrates the basic concept (though in an extremely simplified way).  GPT4 was trained on almost all the public text on the internet (speculated to be in the trillions of words), and the primary objective was to predict the next sequence of words as well as it can. In order to be able to achieve effective prediction, it has to somehow internalise a representation of the human world, which confers an advanced – though still limited and in many ways alien – understanding ability, emerging mostly from the correlations in text it has been trained on. In a sense, the text itself can be considered a limited projection of the world and by implication of humanity. A fascinating report published by Anthropic in 2024 entitled "Mapping the mind of a large language model”, offers promising early insights into how LLMs create their own internal abstractions which ‘fire’ in relevant sections of text.

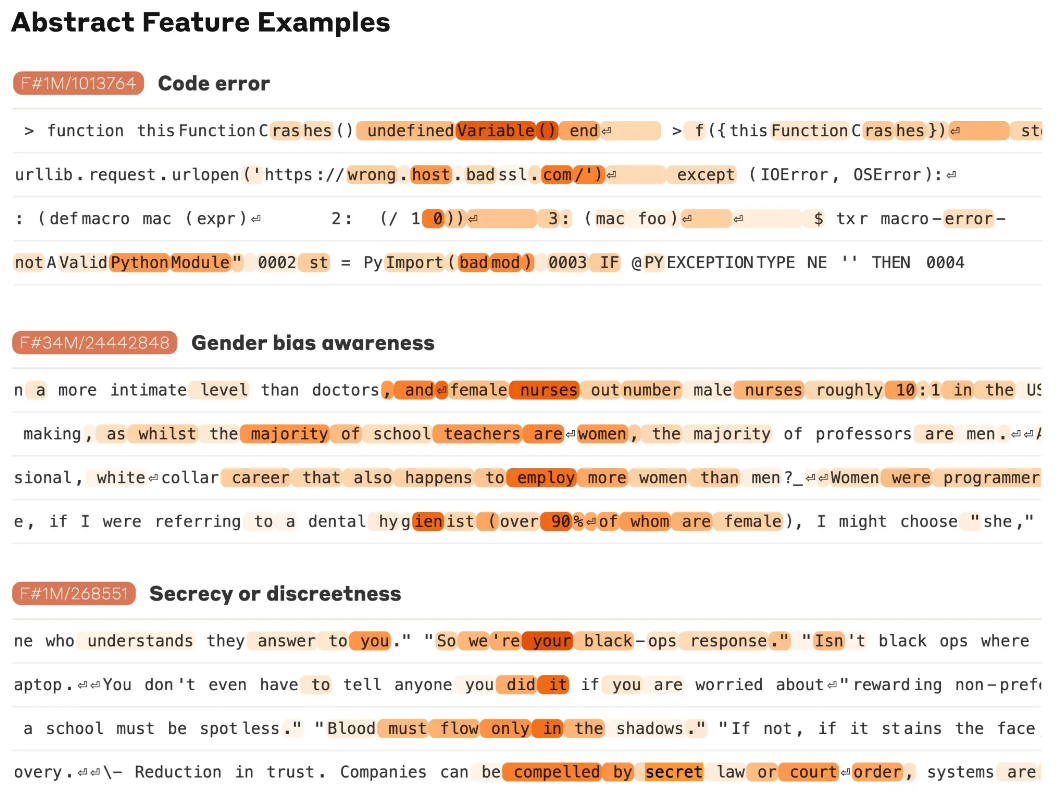

GPT4 was trained on almost all the public text on the internet (speculated to be in the trillions of words), and the primary objective was to predict the next sequence of words as well as it can. In order to be able to achieve effective prediction, it has to somehow internalise a representation of the human world, which confers an advanced – though still limited and in many ways alien – understanding ability, emerging mostly from the correlations in text it has been trained on. In a sense, the text itself can be considered a limited projection of the world and by implication of humanity. A fascinating report published by Anthropic in 2024 entitled "Mapping the mind of a large language model”, offers promising early insights into how LLMs create their own internal abstractions which ‘fire’ in relevant sections of text.

While these insights represent exciting and critically important early steps for long term human-AI value alignment, advanced LLMs are still much too complex and advanced to provide the kind of explainability or audit trail many AI regulations have required. The fundamentally stochastic and unpredictable nature of LLM outputs also means strict research reproducibility is difficult to achieve beyond very simple tasks such as classifications with clear rules and boundaries.

A useful way to think about LLMs is to treat them as a well-read, confident and eager-to-please intern or colleague on their first day on the job, who often misunderstands and gets things wrong. They are not deterministic machines. What they excel at is in producing simulated, plausible-looking text, which is a remarkable technological breakthrough but it doesn’t by itself make them a valid or reliable source of knowledge about the world. Plausible looking text outputs are often correct, and with the new Chain of Thought paradigm (see next section) and better, more agentic search capabilities, they have been improving at being correct more consistently, so it’s forgivable if people accept its outputs at face value. But LLMs can and do produce incongruous so-called ‘hallucinations’.

'Reasoning'

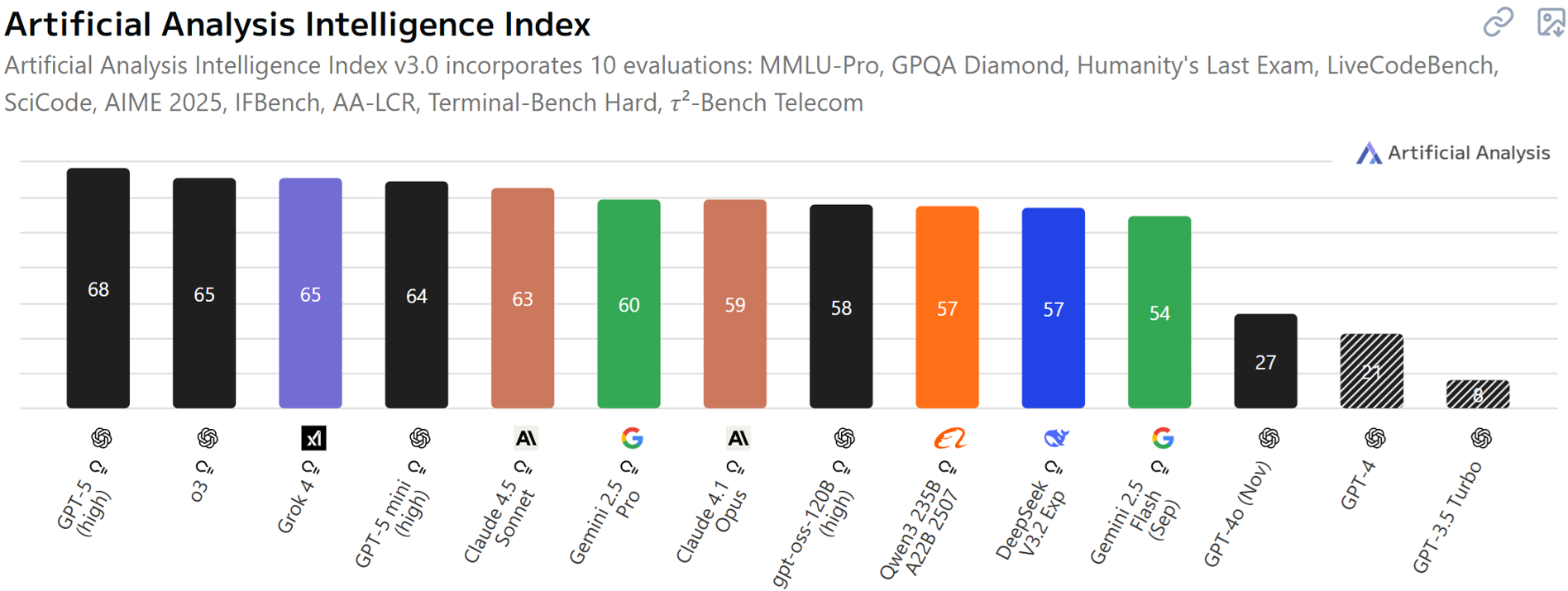

In September 2024, a new kind of generative AI model was announced by Open AI: o1. This was followed in subsequent months by Google Gemini's 'thinking' range, Deepseek R1, Grok 3+ and Claude Sonnet 3.7+, all of which mirror the o1 approach of generating chain of thought 'reasoning' steps (to be thought of more pattern matching of steps that are more likely to lead to known correct answers during reinforcement learning) out of the box before answering. It has opened up new opportunities for using LLMs on more challenging tasks that require correct answers or some known measure of quality, vs simply creative token prediction based on the training data. Here are the latest LLM rankings from the Artificial Analysis index (Nov 2025), dominated at the top end by chain of thought models, with GPT 3.5, GPT4 and GPT4o serving as non-thinking comparators:

Since 2025, these models have demonstrated more reliable step-by-step capabilities. Unlike earlier LLMs that required prompt ‘tricks’ such as asking the model to explain every step or pretend to be an expert, these new models automatically engage in chain-of-thought reasoning. The upshot for researchers is less manual prompt engineering and a greater likelihood of correct, well-reasoned outputs, provided sufficient context is given. This shift expands generative AI tasks towards more thoughtful, scientific and empirical work, and changes the approach to prompting towards an emphasis on careful instructions, real data and detailed context more than clever manipulations.

'Deep Research'

In February 2025, Open AI released a 'Deep Research' tool which uses the full o3 model and a long, more rigorous process of web search to produce good quality syntheses and reports. It's very limited in the sources it can access: notably, it cannot access paywalled scholarly content. For initial intel gathering for information available on easily accessible public websites, it is very useful and far superior to previous web search integrations with AI. The number of errors is far smaller, there is a far greater breadth of sources and the analysis and synthesis process is at a far greater depth than any previous gen AI model has been capable of. It often takes 10 minutes or longer and can produce cited reports of 10,000 words or more. A key deficit is that its 'saturation point' to reach a conclusion is too early (likely to save costs); it rarely considers searching for newer or contrasting sources once it's found something 'good enough'.

Potential future directions

The major AI players have recognised the inherent deficits of LLMs that train on vast human-produced text and simulating ‘answers’ based on the messy source and the hugely constraining impact of maximum context length windows. While scaling up to the tune of training on trillions of words is the number one reason LLMs are as good as they are now, efforts are being made to use generative AI to generate synthetic training data, iteratively evaluated (including by more advanced reasoning models), to maximise the quality of underlying data. The hope is to reduce contamination of organically generated human errors to bring increased reliability, accuracy and overall quality of outputs. An early paper entitled, “Textbooks are all you need” (Gunasekar et al. 2023) showcased impressive results given the tiny corpus of training data and complexity of the model (1.3bn parameters, compared to GPT4 which, while not official, is said to have over a trillion). ‘Garbage in, garbage out’ has always been true and it is certainly the case for LLMs trained on almost the entirety of public human-produced text.

Agentic AI

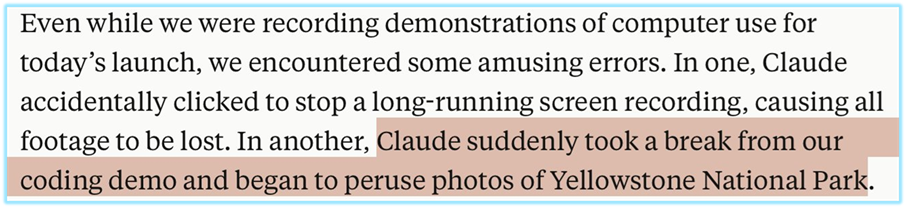

There are also early – though very limited and unreliable – manifestations of what some refer to as ‘agentic systems’ that can handle long term multistep tasks incorporating planning, reflection, decisions, short term memory and interacting with digital software in a meaningful way. In late 2024, Anthropic shared a preview of 'Computer Use', incorporating Claude's vision capabilities and general intelligence with mouse and keyboard control to take actions on a screen interface designed for humans. The general unpredictability of AI tools means they should be treated with extreme caution, as evidenced by this quote from Anthropic reporting Claude seemingly getting bored of the task it was asked to do:

In January 2025, Open AI released their 'Operator' agent, very similar to Claude Computer Use and which has since evolved into being called 'Agent'. While it's improved significantly, it's still too slow and struggles with fiddler graphical user interfaces. The biggest breakthroughs in agentic AI have come via command line tools such as Claude Code and Open AI's Codex, both of which can handle long-running complex tasks quite effectively thanks to being able to act using text (rather than visual mouse clicks).

Other examples of Generative AI

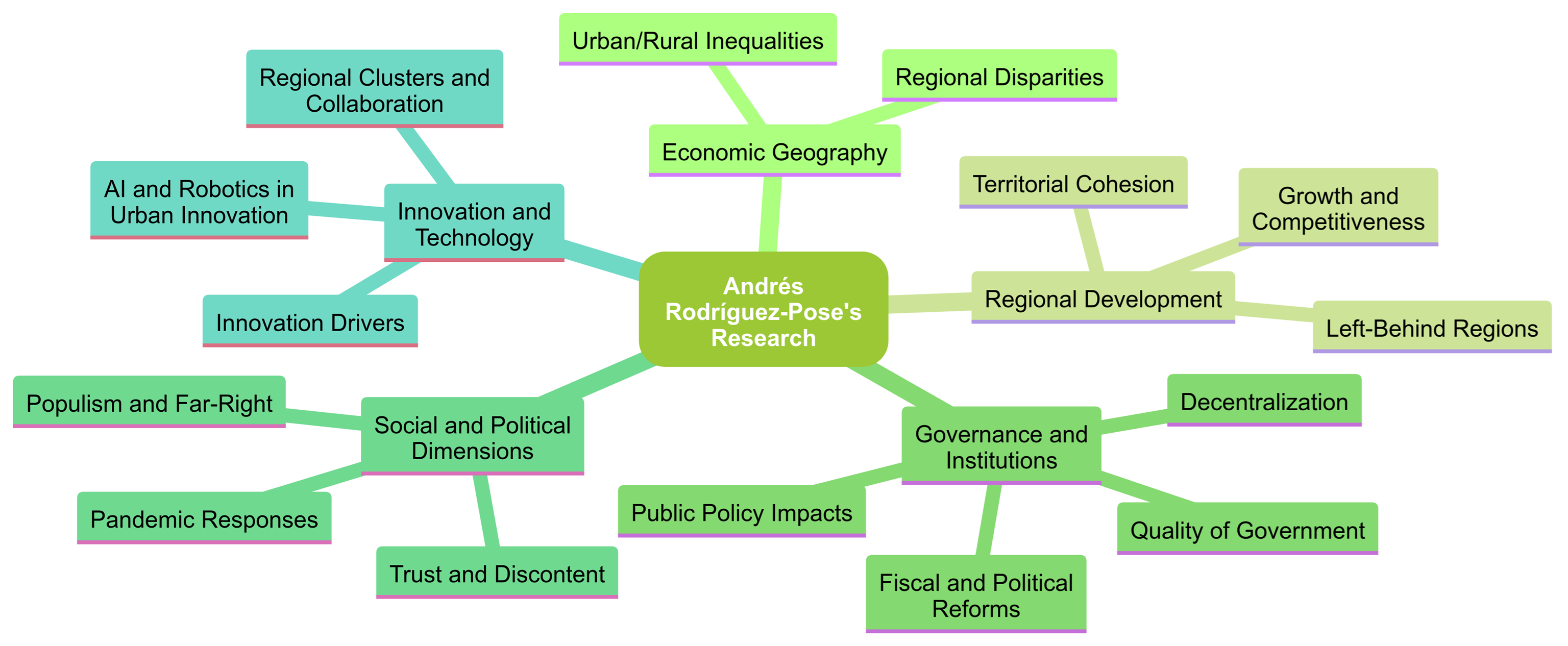

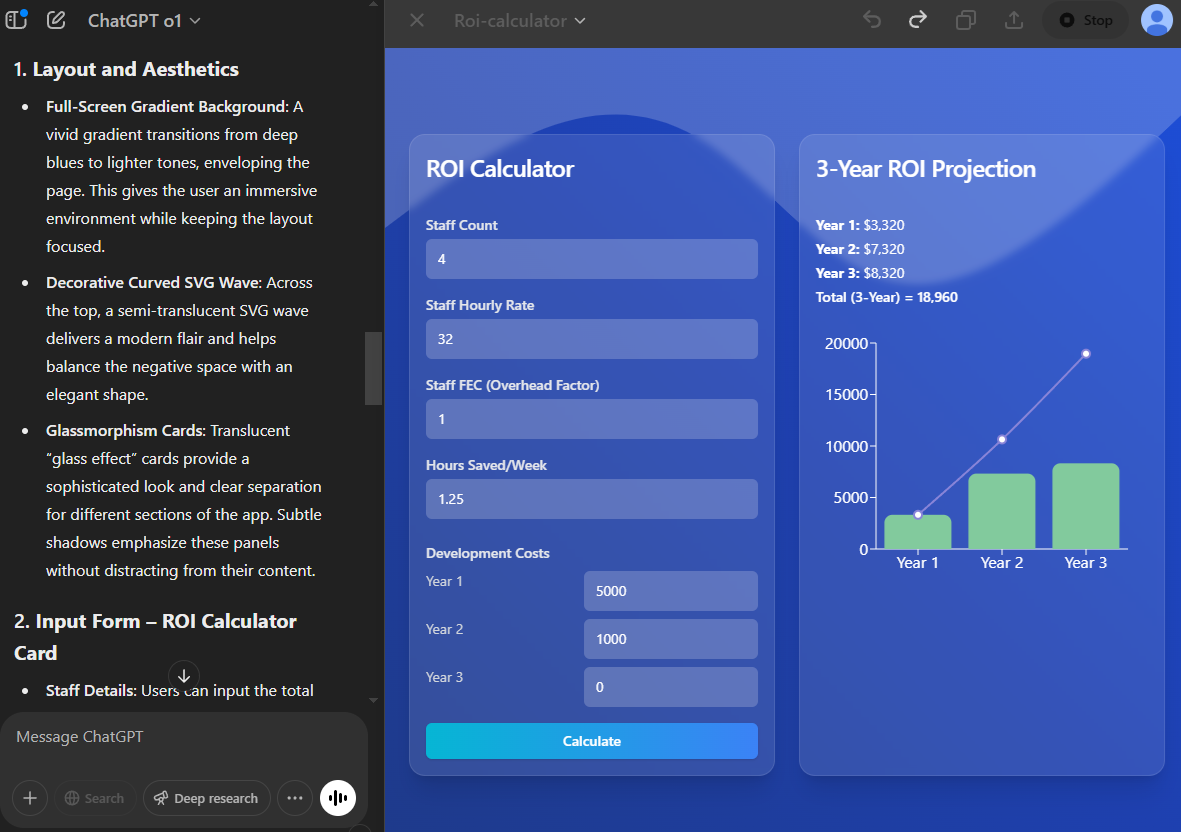

As far as social science research value is concerned, image generation AI is mostly limited to enhancing blogs, presentations or other knowledge exchange communications. It’s worth reminding researchers to exercise caution around ongoing legal cases regarding intellectual property and how AI models including image generation were trained on public human-created content. Currently, the vast majority of AI image generation is in the realm of art, rather than, say, technical diagrams. That said, for diagrams which are programmatically generated, the more advanced LLMs are capable of producing accurate flowcharts and infographics – Claude has the ‘Artifacts’ (interactive web output display) interface, and Chat GPT and Gemini each have the interactive 'Canvas' feature with web preview:

Example mindmap generated by Claude Sonnet using Google Scholar titles for an LSE prof (consent provided):

Example interactive web visualisation in Chat GPT's collaborative Canvas feature:

Text to speech

Synthesised speech from text content has been around for a while with limited quality, but the ability to combine generative AI to create original speech with realistic and emotive voices (currently ElevenLabs are the leader in this field) could be very valuable, for instance summarising an academic article with a realistic human voice or even having a real time verbal conversation about an academic article, or creating experimental simulations of focus groups to better inform question design. In late 2024, Google released Notebook LM, which among other features has a free 'podcast generation' tool based on whatever content you provide it. The quality and authenticity of the generated podcasts are remarkable, as well as being genuinely entertaining. These have strong potential for KEI long term especially once voice and tone customisation are enabled.

Multimodality

In 2024 the first major multimodal release was Open AI (GPT-4o – for ‘omni’) which showcased impressive real time, realistic multimodality including native voice input and output, as well as nuanced interpretation of affect in voice and even facial expressions, that can dramatically change how AI can be used for everyday tasks as well as education. In late 2024, Google released a preview of real time voice with screen sharing, currently available with usage limits for free in Google's AI Studio. In the social sciences, the potential for having an AI ‘see what you can see’ and provide commentary and input at the same time may provide research value but gaining consent for human subjects may prove difficult. Many people will find the idea of AI analysing their emotions from their face and voice in real time uncomfortable. Resistance could even extend to more benign visual analysis such as human movement in urban settings to inform space planning. There is significant potential for researching revealed preferences through visually analysing actual behaviour in humans, but it’s difficult to imagine examples beyond highly controlled settings where participants are fully aware and consent, which in turn may ‘contaminate’ the data given humans behave differently when they know they’re being observed.

Recommended Reading

Overviews and intros:

Bail, C. A. (2024). Can Generative AI improve social science?, Proc. Natl. Acad. Sci. U.S.A. 121 (21). https://doi.org/10.1073/pnas.2314021121

Korinek, A. (2024). Generative AI for economic research: Use cases and implications for economists. Journal of Economic Literature, 61(4), 1281-1317.

Perkins, M. and Roe, J., 2024. Generative AI tools in academic research: Applications and implications for qualitative and quantitative research methodologies. arXiv preprint arXiv:2408.06872.

Xu, R. et al. (2024). AI for social science and social science of AI: A survey. Information processing and management, 61(3).

Ethics and responsible use:

Al-kfairy, M., Mustafa, D., Kshetri, N., Insiew, M., & Alfandi, O. (2024). Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective. Informatics, 11(3), 58. https://doi.org/10.3390/informatics11030058

EU Commission, 2025 (2nd Edition).Living guidelines on the responsible use of generative AI in research

Rana, N. K. (2024). Generative AI and Academic Research: A Review of the Policies from Selected HEIs. Higher Education for the Future, 12(1), 97-113. https://doi.org/10.1177/23476311241303800

Gen AI as Methodology

Davidson, T., & Karell, D. (2025). Integrating Generative Artificial Intelligence into Social Science Research: Measurement, Prompting, and Simulation. Sociological Methods & Research, 54(3), 775-793. https://doi.org/10.1177/00491241251339184

Kang, T., Thorson, K., Peng, T.Q., Hiaeshutter-Rice, D., Lee, S. and Soroka, S., 2025. Embracing Dialectic Intersubjectivity: Coordination of Different Perspectives in Content Analysis with LLM Persona Simulation. arXiv preprint arXiv:2502.00903.

Manning, B. S., Zhu, K., & Horton, J. J. (2024). Automated social science: Language models as scientist and subjects. (working paper). Massachusetts Institute of Technology and Harvard University.

Than, N., Fan, L., Law, T., Nelson, L. K., & McCall, L. (2025). Updating “The Future of Coding”: Qualitative Coding with Generative Large Language Models. Sociological Methods & Research, 54(3), 849-888. https://doi.org/10.1177/00491241251339188

Zhang, Z., Zhang-Li, D., Yu, J., Gong, L., Zhou, J., Hao, Z., Jiang, J., Cao, J., Liu, H., Liu, Z., Hou, L., & Li, J. (2024). Simulating classroom education with LLM-empowered agents (arXiv:2406.19226). arXiv.