Giving an AI agent autonomy to conduct in-depth research interviews entirely on behalf of researchers via a dedicated chatbot interface is a terrible idea at the moment, not least because the quality and utility of its follow-up questions won’t match those of an expert researcher. For narrowly limited cases that only need to elicit basic, more or less subjective information, however, it’s theoretically viable and could help scale up qualitative interviews that permit limited follow-up questions. The primary barrier is the complexity of creating a custom, focused chatbot that can be relied upon to stick to pre-determined lines of questioning: even with a low-code chatbot designer like Microsoft Copilot Studio, a developer would be needed.

An interesting project currently being undertaken at MIT by Professor Andrew Lo is designing the framework for a reliable, publicly accessible financial adviser that can provide suitable advice for any given individual. At a recent MIT event entitled “AI's Education Revolution: What will it take? Live Webcast” (7 May 2024), an audience member raised the issue of assessing an individual’s risk appetite, which is critically important to the advice offered. Prof. Lo responded:

“…one of the things that we’re working on now is an interactive risk assessment that is an exploration with each individual. It’s kind of like a guided tour for an individual’s own psyche as to how they think about losses. It’s a series of questions and answers that where you’re asking not ‘pick A vs B’ or ‘pick C vs D’, but ‘why did you pick that? What did you like about it? What didn’t you like?’ And this is where LLMs come into play, you can actually engage in dialogue in ways that the very best financial advisers will do and the very worst financial advisers won’t do.”

Combined with LLMs’ capabilities for qualitative coding analysis given clear and explicit prompting, there’s certainly potential for acquiring higher-quality qualitative responses from humans with suitable follow-ups (as opposed to the pre-determined responses of quantitative surveys, for instance), without the usual barrier of the significant time needed for asking, transcribing and coding the interactions. As always, you would never want to outsource everything to AI, so a human in the loop is required throughout. For unstructured in-depth interviews that depend on an expert researcher’s knowledge and skill in guiding the conversation towards the research goals, LLMs are simply not capable at this point in time, though this may change as the technology advances.

Implementing this via Microsoft Copilot Studio would require consultation, planning and design with DTS and comes at a cost, but here's a high-level overview of how topics and dialogue trees can be managed using the low-code interface (based on the Microsoft Learn pages "Create and edit topics with Copilot Studio" and "Topics in Copilot Studio"):

Create Topics and Triggers

- New Topics Creation: Each topic should represent a specific area of inquiry or a segment of the interview.

- Trigger Phrases: Define trigger phrases that will initiate these topics. These phrases should be simple and closely related to the questions or prompts you plan to use during the interview.
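To make the relationship between topics and trigger phrases concrete, here is a minimal Python sketch. It is not how Copilot Studio works internally (topics are configured in its low-code interface, and its matching is more sophisticated than a substring check); the topic names, phrases and the `match_topic` helper are all hypothetical and only illustrate the idea of routing a participant's message to a topic.

```python
from typing import Optional

# Hypothetical topics and trigger phrases, invented for illustration only.
TOPIC_TRIGGERS = {
    "background": ["tell you about myself", "my background", "my role"],
    "experience": ["my experience", "what happened", "how it went"],
    "wrap_up": ["finish", "that's all", "end the interview"],
}


def match_topic(utterance: str) -> Optional[str]:
    """Return the first topic whose trigger phrase appears in the utterance.

    Assumption: a plain case-insensitive substring match stands in for
    whatever matching the chatbot platform really performs.
    """
    text = utterance.lower()
    for topic, phrases in TOPIC_TRIGGERS.items():
        if any(phrase in text for phrase in phrases):
            return topic
    return None  # no topic matched; a fallback topic would handle this
```

Keeping the phrases short and close to the wording of the interview prompts, as suggested above, reduces the chance that a message matches the wrong topic or none at all.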

Design Conversation Paths

- Conversation Nodes: Use conversation nodes to design the flow of the interview. Nodes can ask questions, show messages, or perform actions based on user responses.

- Question Types: You can have different types of questions (e.g., multiple-choice with options the user can click, or open-ended text responses) to gather both quantitative and qualitative data. Customise the responses based on user inputs to ensure relevant follow-up questions.

Managing Dialogue and Flow

- Branching Logic: Implement branching logic to handle different responses and avoid irrelevant tangents.

- System and Custom Topics: Use system topics for common interactions like greetings, goodbyes, and error handling. Customise as needed to fit the context of the interview topic and flows.
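The branching and fallback behaviour described in this section can be sketched as a small dialogue tree in plain Python. This is an illustrative assumption rather than Copilot Studio's actual mechanism: the node names, the `MAX_RETRIES` limit and the `next_node` function are hypothetical, and the "goodbye" node simply stands in for a system topic that ends the conversation.

```python
from typing import Tuple

# Hypothetical dialogue tree: each node has a prompt and branches keyed by answer.
NODES = {
    "start": {
        "prompt": "Have you used the service in the last month? (yes/no)",
        "branches": {"yes": "used", "no": "not_used"},
    },
    "used": {"prompt": "What did you use it for?", "branches": {}},
    "not_used": {"prompt": "What stopped you from using it?", "branches": {}},
}
MAX_RETRIES = 2  # assumed limit on re-asking before ending politely


def next_node(current: str, answer: str, retries: int = 0) -> Tuple[str, int]:
    """Return (next node id, updated retry count) for an answer at a node."""
    branches = NODES[current]["branches"]
    if not branches:
        # Open-ended node: any answer is accepted and the interview wraps up.
        return "goodbye", 0
    key = answer.strip().lower()
    if key in branches:
        return branches[key], 0
    if retries >= MAX_RETRIES:
        # Too many unrecognised answers: stop rather than loop forever.
        return "goodbye", 0
    # Unrecognised answer: stay on the same node so the question is re-asked.
    return current, retries + 1
```

Only answers listed in a node's branches move the conversation forward, which is what keeps the bot on its pre-determined lines of questioning; anything else is re-asked a limited number of times and then handed to the closing topic.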

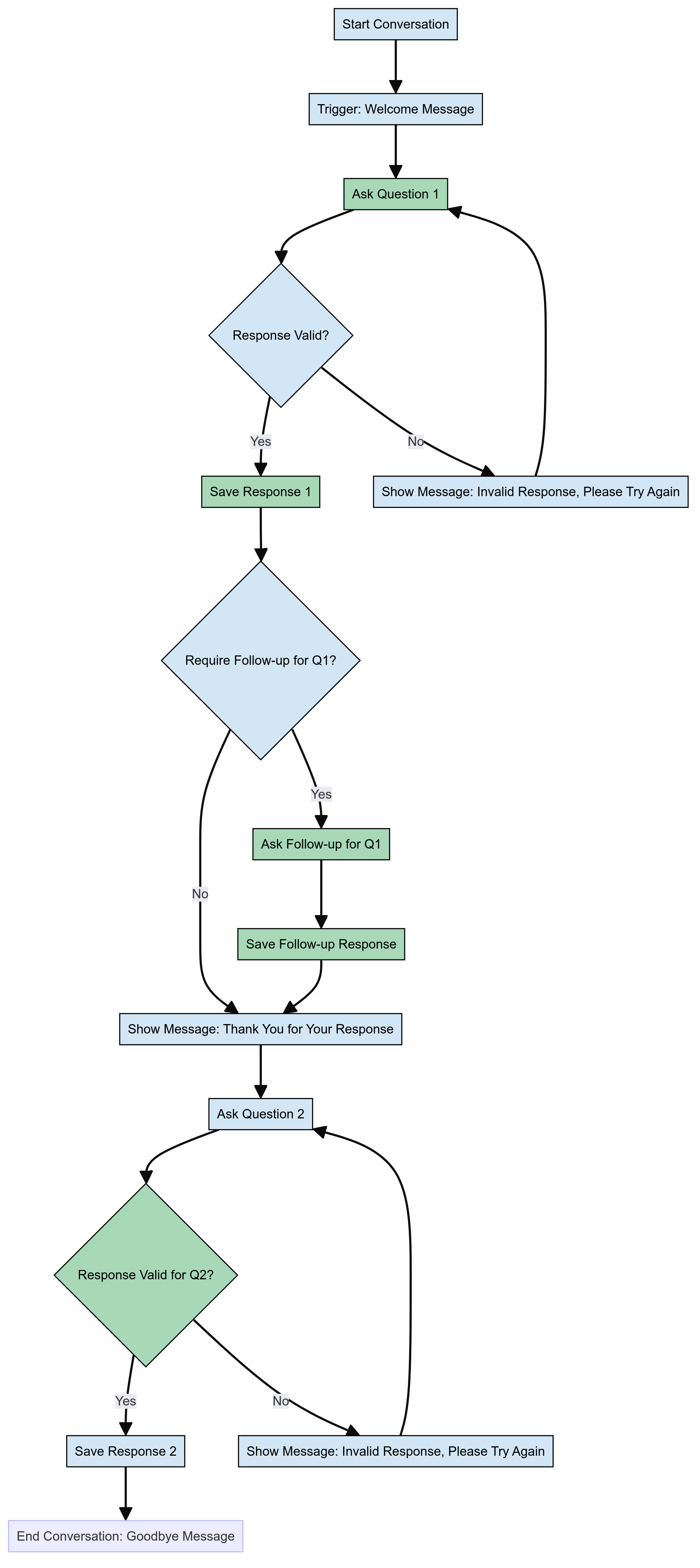

Here's a high-level flowchart of how you might manage the logic of the interview (relying on a lookup database that determines which questions are open-ended, multiple choice or simple inputs, which have follow-up options and which don't, etc.):
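The same lookup-driven logic can also be sketched in a few lines of Python. This is only a rough illustration under stated assumptions: the `QUESTIONS` list stands in for the lookup database, and the question wording, field names (`type`, `options`, `follow_up`) and the `run_interview` function are hypothetical rather than anything provided by Copilot Studio.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical lookup table standing in for the "lookup database".
QUESTIONS: List[Dict] = [
    {"id": "q1", "type": "choice", "text": "How often do you use the library?",
     "options": ["daily", "weekly", "rarely"], "follow_up": "Why is that?"},
    {"id": "q2", "type": "open", "text": "What would improve your experience?",
     "follow_up": None},
    {"id": "q3", "type": "input", "text": "Which year of study are you in?",
     "follow_up": None},
]


def is_valid(question: Dict, answer: str) -> bool:
    """Multiple-choice answers must match an option; others must be non-empty."""
    answer = answer.strip()
    if question["type"] == "choice":
        return answer.lower() in question["options"]
    return bool(answer)


def run_interview(ask: Callable[[str], str],
                  max_attempts: int = 3) -> List[Tuple[str, str]]:
    """Walk the lookup table in order, re-asking invalid answers a few times."""
    transcript: List[Tuple[str, str]] = []
    for q in QUESTIONS:
        for _ in range(max_attempts):
            answer = ask(q["text"])
            if not is_valid(q, answer):
                continue  # re-ask the same question
            transcript.append((q["id"], answer.strip()))
            if q["follow_up"]:
                # Only questions flagged in the lookup table get a follow-up.
                follow = ask(q["follow_up"])
                transcript.append((q["id"] + "_follow_up", follow.strip()))
            break
    return transcript
```

Passing the built-in `input` as `ask` (that is, `run_interview(input)`) would run the interview in a terminal. In a real deployment the `ask` callable would be replaced by the chatbot platform's own question nodes, and the transcript would be stored in line with the consent information given to participants.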

Given the potential for chatbots to have a mind of their own (or be abused by users), strict logic rules, comprehensive error handling, content moderation and thorough testing would be required. These are all built-in features of Copilot Studio, but technical expertise is still needed, which takes time. Combined with the running fees, this means the value (e.g. the scale of potential participants) should be weighed carefully against the costs. It should go without saying that any such interview must make explicitly clear to participants that they're talking to an AI and not a human, along with the usual information about how data is stored and used, so that informed consent is assured.