The best LLMs are highly effective at re-framing language, and this capability, although still limited in more complex cases, extends to explaining ideas. Because explaining a concept relies less on recalling specific empirical facts, hallucinations are less of a concern. The quality of the output is measured primarily by how well the user subjectively comes to understand a concept with which they were previously unfamiliar. A popular framing is "ELI5" (Explain Like I’m 5), but you can set the level and framing however you want. You could say: “I’m an anthropology professor with a background in qualitative participant observation research. I need help understanding Concept X from Discipline Y. Can you please explain it using language and analogies that I might find simpler to grasp?”

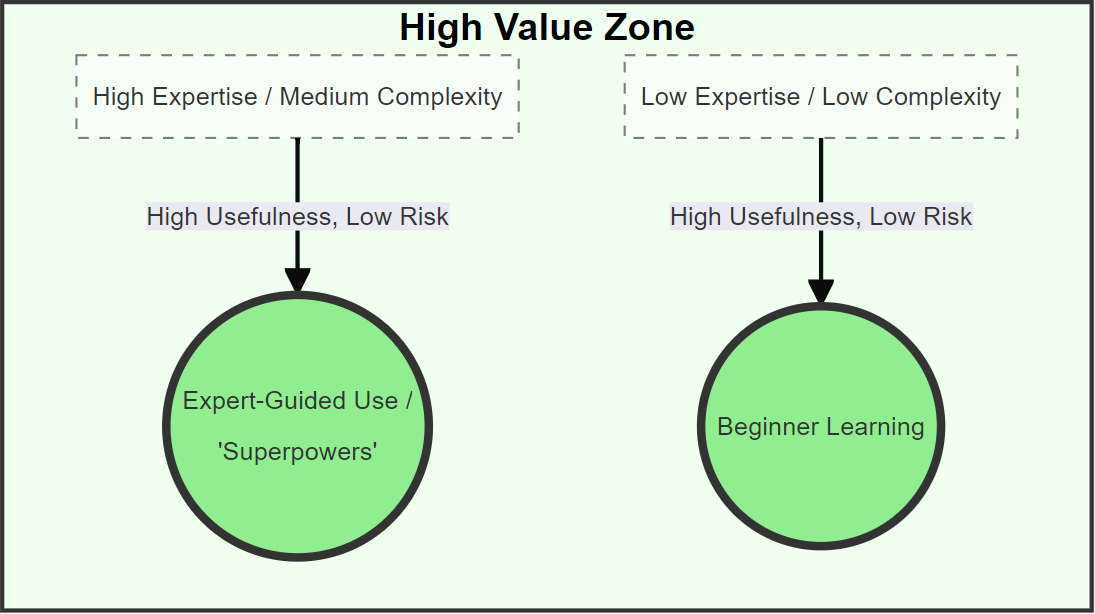

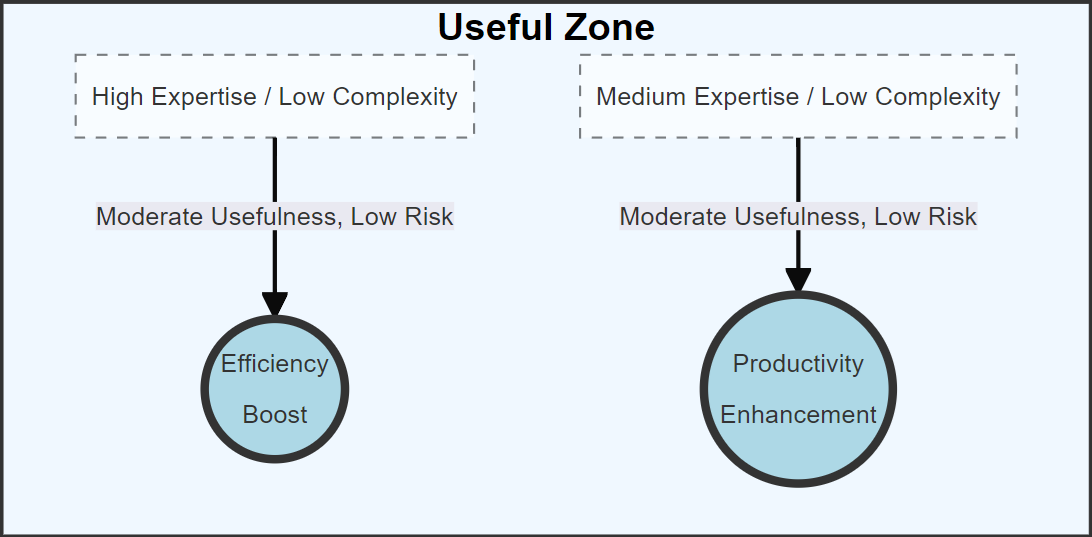

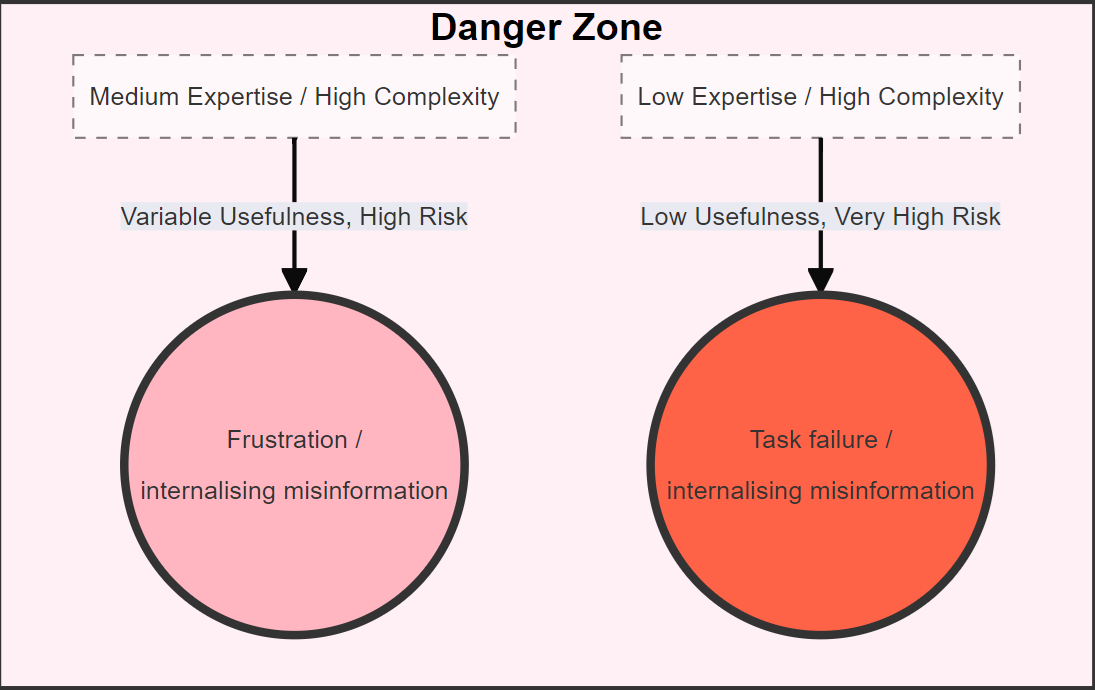

Ultimately this task is about simplifying and re-describing an unfamiliar idea using language, a task at which the most advanced current LLMs perform well. But this doesn't mean they can function like the pill in the movie Limitless and bring the user to an advanced level of expertise without the engagement and struggle that becoming an expert in anything usually requires. In fact, domain knowledge gained through engaged long-term learning will continue to be vital. Anybody attempting to be overly ambitious and bypass continued rigorous and engaged learning will likely become frustrated. The diagrams below demonstrate the contrast between the high value of LLMs for beginner-level learning and for guided productivity in advanced tasks in which the user is already an expert:

So, as per the recurrent theme throughout these guidance pages, the value of LLMs is as a helpful starting point, in this case one that can support and accelerate beginner-level learning. Nonetheless, we should not underestimate their value in re-communicating ideas in any given format and with any given examples or analogies. The most advanced LLMs effectively have ‘all against all’ correlations for concepts and phenomena drawn from the trillions of words in their training data. This means they can be very helpful at logically and plausibly synthesising different ideas and perspectives in ways that are more easily digestible for any individual. The user still has to suggest the ideas in the first place.

To illustrate how versatile the underlying world-model correlations are, here’s a deliberately frivolous example connecting three very disparate phenomena, a combination that probably nobody has explicitly thought about before. It demonstrates the potential value of reframing ideas in a personalised way, based on details you provide about your background and interests. It’s a strongly recommended approach for students, for experienced academics approaching a new topic or methodology, and indeed for anybody looking to learn a new topic or skill:

Prompt:

Please humour me with this request and use your intelligence and training data connections to provide the best quality response:

I'm a lion pride leader and an international chess master. I'm interested in learning more about David Harvey's concept of 'spatial fix'. Given my personal background, knowledge and interests, please provide a personalised explanation of the concept using language and examples that I might better understand.

Response:

As a lion pride leader and international chess master, you're uniquely positioned to grasp David Harvey's concept of 'spatial fix'. Let me explain it in terms that should resonate with your experiences.

The 'spatial fix' is a strategy used in capitalism to solve economic crises by expanding or restructuring geographical space.

In your role as a pride leader, imagine your territory is becoming overhunted, and prey is scarce. You have two options:

1. Intensify hunting in your current area (which might lead to resource depletion)

2. Expand your territory into new, untapped hunting grounds

The second option is essentially a 'spatial fix'. You're solving a problem by geographical expansion.

In chess terms, it's like when you're in a cramped position, and suddenly you're allowed to expand the board, giving you new squares to manoeuvre your pieces. This new space allows you to avoid immediate defeat and continue the game.

For capitalism, this might involve:

1. Opening new markets (like a pride expanding its hunting grounds)

2. Finding new resources (discovering a new water hole in lion terms)

3. Relocating production to areas with cheaper labour (similar to a pride moving to an area with easier-to-catch prey)

However, just as expanding your pride's territory might lead to conflicts with other prides or eventual resource depletion in the new area, the 'spatial fix' in capitalism often only delays crises rather than solving them permanently.

In chess, even an expanded board eventually fills up with pieces, leading to new tactical challenges. Similarly, in global capitalism, as more of the world becomes integrated into the system, finding new 'spatial fixes' becomes increasingly difficult.

Does this explanation help connect the concept to your unique perspective? I'd be happy to elaborate on any aspect that interests you further.

A task like this can also be helpful when you want to disseminate your research to a non-specialist audience. Not only can it help you draft alternative ways to explain your research findings, but every now and then the examples it provides can genuinely open up a different perspective on work in which you have been so individually immersed.
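
If you find yourself reusing this kind of personalised prompt often, its structure (your background, the concept, and a request for tailored language and examples) can be captured in a small template. The following is only a minimal sketch in Python: the function name `build_explanation_prompt` and its parameters are hypothetical, the wording is adapted from the example prompt above, and the resulting text can be pasted into whichever LLM you use.

```python
def build_explanation_prompt(background: str, concept: str, discipline: str = "") -> str:
    """Assemble a personalised 'explain this concept' prompt.

    Hypothetical helper for illustration only; the wording follows the
    example prompt used earlier on this page.
    """
    background = background.strip()
    concept = concept.strip()
    discipline = discipline.strip()
    if not background or not concept:
        raise ValueError("Both a background and a concept are required.")

    # Only mention the discipline if one was supplied.
    origin = f" from {discipline}" if discipline else ""

    return (
        f"I'm {background}. "
        f"I'm interested in learning more about {concept}{origin}. "
        "Given my personal background, knowledge and interests, please provide "
        "a personalised explanation of the concept using language and examples "
        "that I might better understand."
    )


if __name__ == "__main__":
    # Reproduces the frivolous example from this page.
    print(build_explanation_prompt(
        background="a lion pride leader and an international chess master",
        concept="David Harvey's concept of 'spatial fix'",
    ))
```

The useful part is the structure, not the code: the more specific the background you supply, the more tailored the analogies are likely to be.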