Decision-makers are sensitive to uncertainty. Prudential regulation in finance is a good example. In general, the more uncertain a financial institution is about its risk exposures, the more capital it needs to hold. But what if a financial institution is uncertain about how uncertain it is about its risks? LSE Philosophy PhD student Kangyu Wang argues that the increasing use of AI models in finance, while expected to improve the accuracy and cost-efficiency of risk assessment, poses two uncertainty-related challenges to the current prudential regulation system. These difficulties highlight a more general problem regarding uncertainty: what should we do when we are uncertain about how uncertain we are?

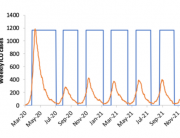

Prudential regulation aims to ensure that financial institutions are financially safe. The increasing use of AI models in finance leads regulators to consider the potential impacts AI might have on financial stability. In May 2024, two external members of the Bank of England’s Financial Policy Committee, Jonathan Hall and Randall Kroszner, discussed the possibility that prudential regulators might need to consider measures such as stress testing, raising the countercyclical capital buffer rate, and market interventions in response to the potential reduction of market stability due to the use of AI in trading.[1] [2] Across the pond, in June 2024, US Secretary of the Treasury Janet Yellen said at the Financial Stability Oversight Council (FSOC) Conference on Artificial Intelligence and Financial Stability that,

Specific vulnerabilities may arise from the complexity and opacity of AI models; inadequate risk management frameworks to account for AI risks; and interconnections that emerge as many market participants rely on the same data and models.[3]

In July 2024, Piero Cipollone, a member of the Executive Board of the European Central Bank, made similar observations.[4]

I am sympathetic to those concerns, but I argue that two problems have been overlooked so far. These problems concern the core of prudential regulation, capital requirements. Financial institutions need to hold enough capital to absorb losses and survive challenging conditions. The increasing use of AI in finance makes it hard for financial institutions to calculate their capital requirements in two ways.

How is uncertainty priced in prudential regulation?

In finance, uncertainty has a price, at least when it is measurable. For example, under Solvency II and similar regulatory frameworks, insurers and reinsurers need to predict the probability distributions of claim expenditures. Under Basel III, banks need to predict the probability distributions of loan losses.[5] These are only a small part of the picture: financial institutions have to assess and manage many other risks.

Critically, many of those predictions come with probability distributions that allow the modellers to calculate confidence intervals. For instance, under Solvency II, insurers are required to ensure a 99.5% probability of adequacy over one year. That is, they must hold enough capital to absorb losses of all kinds over one year at a 99.5% confidence level, so that they will be in danger only in the “worst 0.5%” of scenarios. If an insurer does not know the probability distribution of claim expenditures for a major product it sells, it will be unable to work out how much capital it needs to hold.
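To make the 99.5% requirement concrete, here is a minimal sketch of how a capital figure can be read off a known loss distribution. It is an illustration only, not the Solvency II standard formula: the lognormal loss distribution, its parameters, and the function name `required_capital` are all assumptions made for this example.

```python
import random

def required_capital(losses, confidence=0.995):
    """Empirical quantile of simulated one-year losses at the given confidence level."""
    ordered = sorted(losses)
    # Pick the loss that at least `confidence` of the simulated scenarios do not exceed.
    index = min(len(ordered) - 1, int(confidence * len(ordered)))
    return ordered[index]

rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical one-year loss distribution (lognormal with made-up parameters).
simulated_losses = [rng.lognormvariate(0.0, 1.0) for _ in range(100_000)]

capital = required_capital(simulated_losses)
share_covered = sum(loss <= capital for loss in simulated_losses) / len(simulated_losses)
print(f"capital needed: {capital:.2f}, share of scenarios covered: {share_covered:.4f}")
```

The calculation only works because the distribution is given. Without it, there is nothing to take the quantile of, which is the insurer's difficulty described above.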

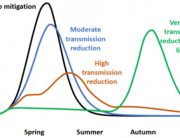

Two different types of risks must be differentiated. Some risks are “tail” risks, others are “normal” or “non-tail”. Common tail risks include catastrophe risks, extreme market movements, and other rare events. For example, consider earthquakes. The probability that there will be a destructive earthquake in, let us say, Tokyo in the coming 12 months is not high. But if there is one, the loss will be huge. It is extremely uncertain how large this probability is, and also extremely uncertain how huge the loss will be if there is one. When insurers and reinsurers underwrite this risk, they need to somehow determine how uncertain they are about their prediction and reflect that in the amount of capital they reserve.[6]

An example of “non-tail” risk is the credit risk taken by a bank when it makes loans. There is a risk that each borrower will default, and different borrowers have different probabilities of default. When a bank lends money to a large number of borrowers, the bank can apply the Law of Large Numbers (LLN) to model their credit risks. LLN ensures that the estimators computed from the observed data points converge to the true values of the estimated parameters as the number of data points used increases, and standard statistical results tell us how uncertain we are given a specific number of data points. This guarantees that when a bank properly estimates, using the upper bound of a 95% confidence interval, that its loan losses will not go above a certain number, there is indeed only a 2.5% chance that its actual loan losses exceed this estimate.
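The way this uncertainty shrinks with the number of loans can be sketched with a small simulation. The true probability of default (`TRUE_PD`), the independence of borrowers, and the normal-approximation interval are all assumptions of this example rather than anything a real bank would take for granted.

```python
import math
import random

def default_rate_interval(outcomes, z=1.96):
    """Normal-approximation 95% confidence interval for an observed default rate."""
    n = len(outcomes)
    rate = sum(outcomes) / n
    half_width = z * math.sqrt(rate * (1 - rate) / n)
    return rate - half_width, rate + half_width

rng = random.Random(1)  # fixed seed so the sketch is reproducible
TRUE_PD = 0.05          # hypothetical true probability of default

widths = {}
for n_loans in (1_000, 10_000, 100_000):
    # Each borrower defaults independently with probability TRUE_PD (an assumption).
    outcomes = [1 if rng.random() < TRUE_PD else 0 for _ in range(n_loans)]
    low, high = default_rate_interval(outcomes)
    widths[n_loans] = high - low
    print(f"{n_loans:>7} loans: default rate in [{low:.4f}, {high:.4f}]")
```

The interval narrows roughly with the square root of the number of loans, and its width is fixed by the observed data and the chosen confidence level alone.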

How can AI models make things different?

AI can be used to assess and manage both kinds of risk. A reinsurer may use an AI model to predict the probability of a Tokyo earthquake and calculate the premium or assist the human pricing and underwriting team to do so. A bank may use an AI model to predict the probability of default of a loan applicant and directly make decisions on their loan application or assist human credit officers to do so. The industry hopes that AI models can be more accurate and more cost-efficient than humans in assessing risks. Although this process is just starting, for the sake of argument, we can take those advantages of AI for granted.

However, there are some problems.

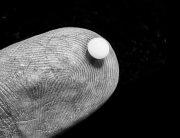

The first problem concerns tail risks, for instance, earthquakes. AI models are not based on classical statistical inference. When an AI model makes a prediction, it usually does not give any confidence interval. In Machine Learning research, this is known as the uncertainty quantification (UQ) problem. There is no established way to quantify the uncertainty of AI predictions. If the reinsurer uses an AI model to predict the probability of a Tokyo earthquake, the model can make a prediction, but it cannot tell us with mathematical rigour how likely this prediction is to miss the real probability, or by how much. Of course, one can train an AI model to provide an interval that looks like a confidence interval, but it is not one. In statistics, given a required level of confidence, the confidence interval of an estimate is completely determined by the observed data. An AI-generated interval around an AI-generated prediction, however, is itself an AI-generated prediction with no mathematical proof behind it. This is like asking a person to guess how good their guess is.

The UQ problem occurs partly because of the curse of dimensionality, and partly because of the black-box nature and the complexity of AI models.[7] Some nice mathematical results that hold in low-dimensional cases do not hold in high-dimensional ones. And high dimensionality is what we must deal with when we train and use AI models. Many of them can easily have billions or trillions of dimensions and parameters. In other high-stakes areas like medical science[8] and drug design[9], the UQ problem has been well acknowledged. In finance, however, this problem is discussed much less.[10] There might be a silver lining: some progress on the UQ problem has been made in Machine Learning research. For instance, some researchers try to apply Bayesian statistical methods to provide a measure of uncertainty. However, many researchers think that the problem remains unsolved, if not unsolvable. We will not see a good solution in the financial context any time soon.

The second problem concerns non-tail risks, for instance, credit risks. The UQ problem will still exist in each AI-generated prediction of the probability of default of a loan applicant, but it matters in a different way here, because no single loan can threaten the bank’s adequacy. Since there are a large number of loans made to a large number of borrowers, the bank can build a statistical model for these AI-generated predictions and calculate the probability distribution of its loan losses according to the AI model. Such a model can, if the bank wants, provide a 95% confidence interval for the estimated AI-predicted loan losses. However, this does not solve the bank’s problem. What the bank needs to know is not how certain it is about what the AI model predicts about the borrowers’ probabilities of default, but how certain it is about the borrowers’ true probabilities of default.

To put it another way, the problem is the uncertainty about model bias. The bias of AI models is a well-known problem in the financial services industry and philosophy alike, but more so in the context of inequality or discrimination than in the context of prudential regulation. If a bank’s AI model is biased in a reckless direction, the bank can be in serious trouble. Of course, the bank can always re-train its AI model and make it produce sufficiently unbiased or conservative predictions. But even so, there is no mathematical guarantee that a model that performs well with the training and testing data sets will continue to do so when dealing with new data, nor is there an established way to quantify how biased it could be or how much capital has to be reserved for each “unit” of potential biasedness.
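A toy simulation can show why an interval built around AI-predicted losses does not settle the matter. Everything here is hypothetical: the true probabilities of default, the size and form of the bias (`HYPOTHETICAL_BIAS`), the equal loan sizes, and the independence of defaults. In practice the bank cannot observe the true probabilities at all; the simulation only makes the gap visible.

```python
import math
import random

rng = random.Random(2)   # fixed seed so the sketch is reproducible
N_LOANS = 50_000
LOAN_SIZE = 1.0          # hypothetical: every loan carries the same exposure
HYPOTHETICAL_BIAS = 0.7  # assumption: the model reports 70% of each true probability

# True probabilities of default, which a real bank never observes directly.
true_pds = [rng.uniform(0.01, 0.10) for _ in range(N_LOANS)]
# What the (biased) AI model reports for the same borrowers.
model_pds = [HYPOTHETICAL_BIAS * p for p in true_pds]

def loss_interval(pds, z=1.96):
    """95% interval for total loss, treating defaults as independent Bernoulli draws."""
    mean = LOAN_SIZE * sum(pds)
    std = LOAN_SIZE * math.sqrt(sum(p * (1 - p) for p in pds))
    return mean - z * std, mean + z * std

model_low, model_high = loss_interval(model_pds)
true_expected_loss = LOAN_SIZE * sum(true_pds)

print(f"interval from model predictions: [{model_low:.0f}, {model_high:.0f}]")
print(f"true expected loss:              {true_expected_loss:.0f}")
```

In this sketch the interval around the model's predictions is narrow, yet the true expected loss lies well above it. The interval measures confidence about what the model says, not about what will actually happen.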

Where do we go from here?

US Secretary of the Treasury Janet Yellen in her abovementioned speech refers to the FSOC’s 2023 Annual Report which states that,

This lack of “explainability” can make it difficult to assess the systems’ conceptual soundness, increasing uncertainty about their suitability and reliability.[11]

Unlike the US regulators, I think that while the problems I highlight are about uncertainty, it is not clear whether the use of AI will actually increase uncertainty. What I am arguing instead is that, once AI is used, there is no rigorous or even agreed-upon way to measure, quantify, or represent the degree of uncertainty about financial risks.

This does not mean that there must be more risk or uncertainty overall because of the use of AI in finance. We cannot even compare. It may very well be the case that the use of AI makes the bank and the reinsurer financially “safer”. But we can neither confirm nor deny this without enough empirical data and this kind of “safety” is undefined in the current prudential regulation system. This is why I identify the two challenges I discuss as challenges to prudential regulation rather than challenges to AI for finance per se.

It is not clear what regulators should do. Policymakers should be sensitive or even averse to uncertainty, according to some philosophers[12] and economists[13]. But this does not tell us what to do about the uncertainty regarding the measure of uncertainty or how to weigh that against the accuracy of risk assessment. One may think that an additional capital buffer should be introduced in response to the uncertainty of AI models. However, the problem is precisely that we do not know how to quantify this uncertainty and hence do not know how to calculate this buffer. One huge benefit of AI models is that their accuracy will hopefully allow financial institutions to take and profit from some risks that they would otherwise not be able to take. This will not only benefit the financial industry but also unleash huge economic opportunities. A very “safe” buffer could mean that it is not economically worthwhile for financial institutions to invest in such innovations.

More generally, it is not clear what we should do when we realise that we are not only uncertain about something but also uncertain about how uncertain we are about it. For centuries, we have been relying on traditional statistical tools which, though not perfect, provide some established ways to measure uncertainty. If we are going to rely more and more on AI, we’d better find an alternative solution as quickly as possible.

By Kangyu Wang

Kangyu Wang is a PhD student at the Department of Philosophy, Logic and Scientific Method at LSE. His areas of interest include moral and political philosophy, philosophy of economics, rationality and practical reason.

Notes

[1] https://www.bankofengland.co.uk/speech/2024/may/jon-hall-speech-at-the-university-of-exeter

[2] https://www.bankofengland.co.uk/speech/2024/may/randall-kroszner-keynote-address-at-london-city-week#:~:text=Change%20is%20already%20occurring%20in,using%20or%20developing%20ML%20applications.

[3] https://home.treasury.gov/news/press-releases/jy2395

[4] https://www.ecb.europa.eu/press/key/date/2024/html/ecb.sp240704_1~e348c05894.en.html

[5] Laeven, L., & Levine, R. (2009). Bank governance, regulation and risk taking. Journal of financial economics, 93(2), 259-275; Acharya, V. V., et al. (2010). Manufacturing tail risk: A perspective on the financial crisis of 2007–2009. Foundations and Trends® in Finance, 4(4), 247-325; Boonen, T. J. (2017). Solvency II solvency capital requirement for life insurance companies based on expected shortfall. European actuarial journal, 7(2), 405-43; Basel Framework: https://www.bis.org/basel_framework/index.htm

[6] Bernard, C., & Tian, W. (2009). Optimal reinsurance arrangements under tail risk measures. Journal of risk and insurance, 76(3), 709-725; Cummins, J. D., Dionne, G., Gagné, R., & Nouira, A. (2021). The costs and benefits of reinsurance. The Geneva Papers on Risk and Insurance-Issues and Practice, 46, 177-199; Bradley, Richard (2024) Catastrophe insurance decision making when the science is uncertain. Economics and Philosophy. ISSN 0266-2671 (In Press).

[7] Crespo Márquez, A. (2022). The Curse of Dimensionality. In: Digital Maintenance Management. Springer Series in Reliability Engineering. Springer, Cham. https://doi.org/10.1007/978-3-030-97660-6_7; Tyralis, H., & Papacharalampous, G. (2024). A review of predictive uncertainty estimation with machine learning. Artificial Intelligence Review, 57(4), 94; Mehdiyev, N., Majlatow, M., & Fettke, P. (2024). Quantifying and explaining machine learning uncertainty in predictive process monitoring: an operations research perspective. Annals of Operations Research, 1-40.

[8] Begoli, E., Bhattacharya, T., & Kusnezov, D. (2019). The need for uncertainty quantification in machine-assisted medical decision making. Nature Machine Intelligence, 1(1), 20-23.

[9] Mervin, L. H., Johansson, S., Semenova, E., Giblin, K. A., & Engkvist, O. (2021). Uncertainty quantification in drug design. Drug discovery today, 26(2), 474-489.

[10] Akansu, A. N., Kulkarni, S. R., & Malioutov, D. (2016). Overview: Financial signal processing and machine learning. Financial Signal Processing and Machine Learning, 1-10; Rundo, F., Trenta, F., Di Stallo, A. L., & Battiato, S. (2019). Machine learning for quantitative finance applications: A survey. Applied Sciences, 9(24), 5574; Warin, T., & Stojkov, A. (2021). Machine learning in finance: a metadata-based systematic review of the literature. Journal of Risk and Financial Management, 14(7), 302; Sahu, S. K., Mokhade, A., & Bokde, N. D. (2023). An overview of machine learning, deep learning, and reinforcement learning-based techniques in quantitative finance: recent progress and challenges. Applied Sciences, 13(3), 1956.

[11] https://home.treasury.gov/system/files/261/FSOC2023AnnualReport.pdf, 91-92.

[12] Rowe, T. and Voorhoeve, A., 2018, “Egalitarianism under Severe Uncertainty,” Philosophy and Public Affairs 46: 239–68. Roussos, J., 2020, “Policymaking under scientific uncertainty,” PhD thesis, The London School of Economics and Political Science. Bradley, R. and Stefánsson, O., 2023, “Fairness and risk attitudes,” Philosophical Studies 180 (10-11):3179-3204. See also Buchak, L., 2017, “Taking Risks behind the Veil of Ignorance,” Ethics 127(3): 610-644.

[13] Then-member of the Monetary Policy Committee of the Bank, former White House economic advisor, MIT Sloan economist Professor Kristin Forbes gave a speech in 2016 on “Uncertainty about uncertainty”, https://www.bankofengland.co.uk/-/media/boe/files/speech/2016/uncertainty-about-uncertainty.pdf
