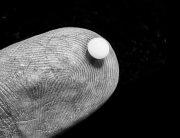

Research into the minds of other animals, and particularly invertebrates, raises questions about how we define and understand consciousness itself. LSE Philosophy PhD student Daria Zakharova discusses how creating an artistic interpretation of the mind of a spider can inspire new legislation and shed light on how we understand developments in new forms of artificial intelligence.

In the summer of 2023, an interdisciplinary team and I built a giant sculpture of a spider in the northern desert of Spain. Our spider was modelled after Portia, a genus of jumping spider known for its excellent vision and sophisticated hunting behaviour that has fascinated researchers for decades.

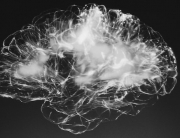

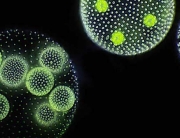

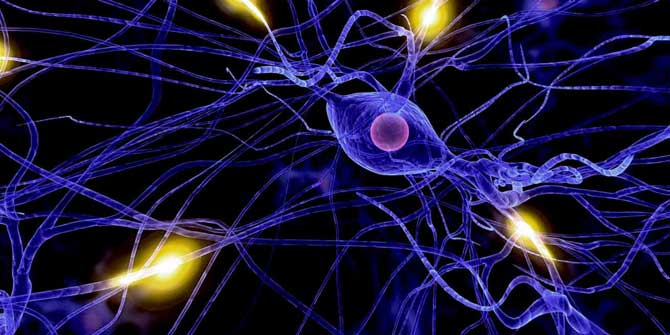

The installation invited visitors to physically get inside the head of Portia to discover an artistic meditation on the mind of an arthropod. They would enter the sculpture through an opening in the back of its head, walk briefly through a dark corridor and find themselves in a space representing the inside of Portia’s mind. Between its two giant eyes, glowing with lights running in intricate patterns, original music paired with the creative coding of the lights immersed the visitor in a meditation on the spider’s subjective experience.

We called the installation “In Search of Spider Consciousness”, an allusion both to my ongoing research on spider consciousness and to the paper “The Search for Invertebrate Consciousness” by my supervisor, Jonathan Birch. That paper assesses several approaches to the scientific study of signs (or markers) of consciousness in invertebrates: animals without a backbone, such as insects and octopuses.

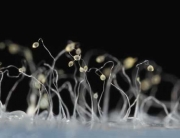

Over 90% of all animals on our planet are invertebrates, yet these are animals whose minds, cognitive abilities and capacity for conscious experiences we underestimate. We tend to think more about dogs, cats or monkeys as having subjective experiences, rather than shrimps, crabs or bees.

Correcting this deficit has been a major focus of the Foundations of Animal Sentience Project (ASENT) at LSE. Whilst this may seem a purely theoretical pursuit, the project has brought about collaborations between biologists and philosophers and has led international discussions on animal welfare and policy, notably by shaping the UK’s Animal Welfare (Sentience) Act 2022, which extended protection from inhumane treatment beyond the vertebrates previously covered to certain invertebrates.

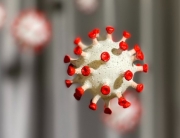

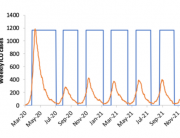

This change to UK law recognises cephalopods (such as octopuses and squid) and decapod crustaceans (such as crabs, lobsters and shrimps) as sentient, that is, capable of having positive and negative experiences, with pain and suffering being of special interest to the issues around their welfare. However, there are many more invertebrates not legally recognised as sentient. For instance, bees show behaviours strongly suggesting markers of sentience, but are not (yet) covered under the Sentience Act.

The problem of human, animal and other minds

When we talk about conscious or sentient experiences we usually mean that there is something that it feels like to be the subject having the experiences. One difficulty with understanding others’ experiences is that we can never have them directly: we can never literally look into someone else’s head. This is known as the problem of other minds. While some take this to mean that we can never be truly certain of whether other humans are conscious, let alone other animals, the majority agree that we have good reasons to think that other humans are conscious. In studying animal cognition from a philosophical perspective, we are making the case that there are scientifically respectable reasons to think that many other animals have conscious experiences as well, and that these should be factored into their relationships to humans.

A particular difficulty concerns how non-human minds should be studied and how findings ought to be interpreted. For instance, spiders, such as our Portia, have been studied extensively for their sophisticated behaviour. This intense study has resulted in ongoing significant disagreement over whether a certain behaviour, paired with what we know about the spider’s brain, should be taken as signifying intelligence, rather than a “merely” hardwired, if somewhat sophisticated, mechanism.

Debates of this type may sound familiar to people who have been following developments in AI. Many argue that large language models are able to display “true” intelligence and may be capable of conscious experience, while others are skeptical that anything of interest is at play in a “mere” next-token-predicting algorithm. The disagreement in both these cases concerns less what is being directly observed in a lab and more what we take our most scrupulous observations to show. How then are these findings to be categorised in accordance with what we know and understand human and non-human minds to be?

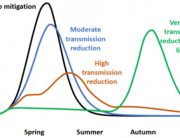

A lot of it comes down to philosophical considerations, for example that pain is a subjective experience and requires consciousness to be felt, or that pain is morally significant when making decisions about how to treat an animal, or what we actually mean when we say that an animal or a machine is intelligent. Is successfully mimicking human behaviour a sign of true intelligence and consciousness or lack thereof? Critically engaging with and rigorously developing such philosophical commitments and our understanding of animal and artificial minds can significantly shape our relationship to (more distant species of) animals and AI in terms of future policy. At the same time, AI may also influence how we understand animal minds, for example in widening our ideas about the meaning of intelligence and the various ways it could be realised in non-humans. Research on non-human minds therefore provides a resource needed to help steer these debates and to help establish meaningful directions for further scientific investigation.

From philosophy to art to impact

Besides artistic self-expression, this project has been motivated by the desire to illuminate a complex, transdisciplinary and novel subject in science and philosophy of mind, and by the importance of fostering deeper curiosity about the diverse forms of minds and consciousness that co-inhabit the world with us.

The philosophy of Portia provides a point of coherence for scholarly, artistic and popular engagement (even if The Economist took a less cautious view of this work than I advocate). Building this arachnid art installation brought together creative coders, composers, engineers, and designers to think about ways of imagining and artistically representing the strange and largely undiscovered mind of an invertebrate. This interest has endured and a new display of Portia can be found at the LSE Festival this year.

Finally, “In Search of Spider Consciousness” is set to continue. One project currently in the making is to exhibit Portia in a museum alongside an array of other installations designed to teach children to think about animal minds through science and philosophy-inspired art. As my work on possible markers of sentience in invertebrate and artificial minds progresses, I hope that, in time, it will also help advance and shape legislation and ways of thinking that reframe the relations between humans and non-humans in the future.

By Daria Zakharova

Daria Zakharova is a PhD student in the Department of Philosophy, Logic and Scientific Method at LSE. She is interested in biological and artificial minds.

Credits

This article was originally published on the Impact of Social Science Blog of the London School of Economics and Political Science.

Very interesting idea. It’s extremely hard to imagine how it feels to be a spider, to look at the world through its eyes, but art can certainly help with that. I’m looking forward to more frequent joint ventures between philosophy and art. I’m also skeptical about such endeavors, mainly because we still don’t know exactly what consciousness is and what generates it. So, we cannot really tell if a spider is conscious in any meaningful way.