The world recently lost Daniel Ellsberg, famous for leaking the Pentagon Papers in 1971. Ellsberg will be remembered within philosophy for a paradox in decision theory. Nikhil Venkatesh explores the connection between this paradox and his momentous decision to leak the Papers.

Ellsberg’s Life

Many philosophers have put themselves on the wrong side of the authorities. At the birth of western philosophy, Socrates was executed by the city-state of Athens; Hypatia was murdered by a mob. Since then, Boethius, Russell and Gramsci have written philosophy from prison. Marx’s tussles with governments forced him to flee from Germany to France, from France to Belgium and then to London. Amongst living philosophers, Angela Davis has been jailed and tried (and acquitted) for murder, and David Enoch was recently arrested for protesting against the Israeli government.

June 2023 saw the death of Daniel Ellsberg. Ellsberg is best-known as the military analyst who leaked ‘the Pentagon Papers’ in 1971. The Papers exposed the fact that the American government and military had been lying to the public about the state of the war in Vietnam. Ellsberg was tried for espionage, theft and conspiracy. He expected to spend the rest of his life in prison. However, the Nixon administration’s heavy-handed tactics in trying to silence and discredit him led the judge to declare a mistrial, leading to Ellsberg’s freedom and, indirectly, to Nixon’s downfall in the Watergate scandal.

Ellsberg’s Paradox

Earlier in his life, Ellsberg made a significant contribution to philosophy, in the area of decision theory. Decision theory deals with how we ought to make choices when we are uncertain about how things will turn out. The standard account is known as ‘expected utility theory’ (EUT). According to EUT, the rational agent evaluates an option by calculating the good that will come about in each possible outcome, multiplying it by the probability that the outcome will occur if they choose that option, and adding up the results. The right choice is the option with the highest number. Take the following example:

An urn contains 70 red balls and 30 black balls. I blindfold you and offer you a bet: if you draw a red ball from the urn, I give you £10. If you draw a black ball, you give me £12.

Should you take the bet? Here’s what EUT says.

Choice 1: Reject the bet. If you do this, the outcome, with 100% probability, is that you win no money, but you lose no money. Let’s say this outcome is worth 0 to you. The expected utility is 0 x 100% = 0.

Choice 2: Take the bet. If you do this, there are two possible outcomes. With 70% probability, you win £10. With 30% probability, you lose £12. Assuming that you only care about how much you win, the expected utility is (10 x 70%) + (-12 x 30%) = 7 – 3.6 = 3.4.

3.4 is greater than 0, so you should take the bet, according to EUT.
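The same arithmetic can be written as a short Python sketch. The function name `expected_utility` and the way each choice is listed as (utility, probability) pairs are illustrative assumptions, and, as in the example above, utility is simply taken to be pounds won or lost.

```python
from fractions import Fraction


def expected_utility(outcomes):
    """Add up utility * probability over (utility, probability) pairs."""
    return sum(utility * probability for utility, probability in outcomes)


# Choice 1: reject the bet. One outcome, worth 0, with probability 1.
reject_bet = [(0, Fraction(1))]

# Choice 2: take the bet. Win 10 with probability 70/100, lose 12 with 30/100.
take_bet = [(10, Fraction(70, 100)), (-12, Fraction(30, 100))]

eu_reject = expected_utility(reject_bet)
eu_take = expected_utility(take_bet)

# EUT recommends whichever option has the higher expected utility.
recommendation = "take the bet" if eu_take > eu_reject else "reject the bet"
print(float(eu_reject), float(eu_take), recommendation)
```

Exact fractions are used rather than floating-point numbers so that the result is exactly 3.4 and not a rounding approximation of it.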

So far, so good for EUT. But Ellsberg came up with a problem for it – known to decision theorists as ‘the Ellsberg Paradox’. Imagine the following example:

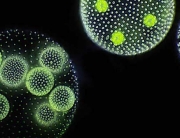

An urn contains 90 balls. You know that 30 of them are red, and the rest are black and yellow, but you don’t know how many are black and how many are yellow. I blindfold you and offer you the following bets.

Bet A: £10 if you draw a red ball, £0 otherwise.

Bet B: £10 if you draw a black ball, £0 otherwise.

Bet C: £10 if you draw a red or yellow ball, £0 otherwise.

Bet D: £10 if you draw a black or yellow ball, £0 otherwise.

These are all ‘one-way bets’ – you can’t lose anything – so they’re all better than not betting at all. But how do they rank against one another? Most people rank Bet D highest, then Bet C, then Bet A, then Bet B. EUT agrees that C and D are better than A and B. But EUT can’t explain how someone could prefer D to C and also prefer A to B. Preferring D to C only makes sense, for EUT, if you think there are more than 30 black balls. But if you think there are more than 30 black balls, then you should prefer B to A. (I’ll let you do the maths on this one.)
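For readers who would rather check the maths by brute force, here is a minimal Python sketch. It assumes that an EUT agent's belief about the urn can be summed up as a single guess at the number of black balls, and it tries every whole-number guess from 0 to 60. The function names are illustrative only.

```python
from fractions import Fraction

TOTAL_BALLS = 90
RED_BALLS = 30
PRIZE = 10  # pounds won on a successful draw


def expected_winnings(black):
    """Expected winnings of Bets A-D if the urn held `black` black balls."""
    yellow = TOTAL_BALLS - RED_BALLS - black

    def bet(winning_balls):
        return PRIZE * Fraction(winning_balls, TOTAL_BALLS)

    return {
        "A": bet(RED_BALLS),           # red
        "B": bet(black),               # black
        "C": bet(RED_BALLS + yellow),  # red or yellow
        "D": bet(black + yellow),      # black or yellow
    }


def matches_common_preferences(black):
    """True if this guess makes A better than B and D better than C."""
    values = expected_winnings(black)
    return values["A"] > values["B"] and values["D"] > values["C"]


# Try every possible number of black balls among the 60 non-red balls.
consistent_counts = [b for b in range(0, 61) if matches_common_preferences(b)]
print(consistent_counts)
```

The list comes out empty. A beats B only when the guess is below 30 black balls, and D beats C only when it is above 30, so no single guess supports both preferences. Because expected winnings are linear in the number of black balls, the same holds if the guess is replaced by the average of a probability distribution over possible urns.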

What Ellsberg and others take from this case is that we are ‘ambiguity averse’ (though see Chapter 9 and Chapter 13 of Decision Theory With a Human Face, by LSE’s Richard Bradley). We prefer to know for sure, in Bet A, that we have a 1/3 chance of winning, and to know for sure, in Bet D, that we have a 2/3 chance. We might estimate that we also have a 1/3 chance in B and a 2/3 chance in C. But, as well as preferring to bet on higher probabilities than lower ones, we also prefer to bet on probabilities we have evidence for than on probabilities we simply guess at. EUT, by contrast, tells us to choose based on what we think will happen, whether what we think is based on evidence or estimates. So, Ellsberg and others say, we need a decision theory that departs from EUT, one that puts greater weight on what we know.
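To make ‘puts greater weight on what we know’ concrete, here is a sketch of one well-known ambiguity-averse rule, maxmin expected utility, usually attributed to Gilboa and Schmeidler. It is not a rule the post itself endorses, and it is only one of several rivals to EUT. The sketch assumes the agent treats every split of the 60 non-red balls between black and yellow as possible, and scores each bet by its worst-case expected winnings; all names in the code are illustrative.

```python
from fractions import Fraction

TOTAL_BALLS = 90
RED_BALLS = 30
PRIZE = 10  # pounds won on a successful draw


def expected_winnings(black):
    """Expected winnings of Bets A-D if the urn held `black` black balls."""
    yellow = TOTAL_BALLS - RED_BALLS - black

    def bet(winning_balls):
        return PRIZE * Fraction(winning_balls, TOTAL_BALLS)

    return {
        "A": bet(RED_BALLS),
        "B": bet(black),
        "C": bet(RED_BALLS + yellow),
        "D": bet(black + yellow),
    }


# Assumption: every composition from 0 to 60 black balls is taken seriously.
possible_urns = [expected_winnings(black) for black in range(0, 61)]

# Maxmin rule: score each bet by its lowest expected winnings across all urns.
worst_case = {
    name: min(urn[name] for urn in possible_urns) for name in ("A", "B", "C", "D")
}

for name, value in worst_case.items():
    print(name, value)
```

Under these assumptions the worst cases are 10/3 for A, 0 for B, 10/3 for C and 20/3 for D, so the rule prefers A to B and D to C, the pair of preferences EUT could not combine. It ties A with C on worst case alone, so it does not by itself reproduce the full ranking most people give.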

Ellsberg’s decision

In the rest of this post, I want to apply Ellsberg’s philosophical insight to the momentous decision he took to leak the Pentagon Papers. The first thing to notice is that Ellsberg could simply have not taken the bet. Even once he’d decided that the war was wrong, he could have kept silent. Many did. As he himself said:

“a very large range of high-level doves thought we should get out and should not have got involved at all. They were lying to the public to give the impression that they were supporting the president when they did not believe in what the president was doing. They did not agree with it but they would have spoken out at the cost of their jobs and their future careers. None of them did that or took any risk of doing it and the price of the silence of the doves was several million Vietnamese, Indochinese, and 58,000 Americans.”

This, then, was the calculation Ellsberg had to make. Would he take the cost of his career in order to stop the war?

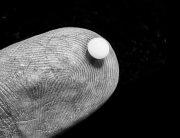

Let’s think through the problem as a decision theorist might. If he hadn’t leaked the papers, Ellsberg would very probably have enjoyed a normal life of a high-ranking government official: good salary, good pension, the respect of his peers. In leaking the papers, he lost all of that. He knew for certain that he would pay this cost: nobody could leak top secret documents and go on working in the national security establishment. He also knew he had a very high chance of being imprisoned for a long time. He said he ‘assumed’ that he would spend the rest of his life behind bars. As it turned out, the Nixon administration’s overreach in trying to ensure this happened meant that the judge threw out the case. This overreach, however, exposed Ellsberg to an even more severe risk, as plans to assassinate him were considered. So, on the cost side, Ellsberg faced certainty of losing his career, high probability of long-term imprisonment, and some smaller chance of being killed.

On the benefit side, though, was something very large: an end to the war. Those millions of lives Ellsberg mentioned, and the willingness of the US military and government to go on fighting an unwinnable campaign that he exposed, suggested that millions more deaths would result from a continuation of the conflict. Therefore, ending the war would be a very good thing. But Ellsberg had no idea how far his leaking the Pentagon Papers would contribute to the end of the war. Perhaps the American public, when they saw the truth, would demand the government withdraw troops. (However, as Ellsberg himself noted, many Americans were already against the war, and the government didn’t seem to care.) Perhaps the leaks would humble Nixon and he would change his mind. Perhaps they would lead to mutiny amongst American soldiers. Perhaps they would increase the chances of an anti-war Democrat winning the 1972 election. Ultimately, Nixon’s response to the leaks led to Watergate, and then to his resignation, and then to US withdrawal. But it was a long shot: and the chances of the leaks ending the war were not only small, but also impossible to calculate. Even with hindsight, it is hard to say how far Ellsberg’s leaks hastened the end of the war, compared with the contributions of the Viet Cong, the mounting economic costs to America, the antiwar movement, Nixon’s missteps over Watergate, or international diplomacy. The leaks ending the war was the equivalent of getting a million pounds for drawing a black ball from Ellsberg’s urn, when there are thousands of balls in there and you don’t know how many are black.

So Ellsberg faced certainty of losing his career, high probability of long-term imprisonment, and a small risk of being murdered, versus a highly uncertain chance of ending the war. It is clear how EUT might justify his decision to leak: even if the chance of leaking ending the war was pretty low, he could have been right to do it, because a small chance of a very big win can outweigh certain or more probable smaller losses. However, a decision theory that is more sensitive to Ellsberg’s paradox, ironically, makes it less easy to justify Ellsberg’s decision. If we place more weight on the things we know (e.g. that leakers lose their careers and likely go to jail) than on the things we guess (e.g. that a leak could play some part in ending the war), then the costs loom larger than the benefits and the case against leaking looks stronger. If we care more about avoiding certain losses than achieving speculative gains, we might have recommended that Ellsberg look after his career.

Some conclusions

So what lessons should we take from the work and life of Daniel Ellsberg? Firstly, if we agree with what Ellsberg did in leaking the Pentagon Papers, then it seems that even decision theories that are ‘ambiguity averse’ should allow people to pay certain or highly probable costs to do things that might have immense value. One way of putting this might be in terms of expected utility. A small probability multiplied by a very large amount of good equals a big number.

But another would be to abandon the idea that decisions always require calculations. Sometimes, Ellsberg justified what he did in terms of a trade-off: millions of lives versus one lifetime in jail. But sometimes he spoke of it as something he had to do, whatever the cost – and whatever the benefit. He was inspired by Randy Kehler, whom Ellsberg heard talk whilst Kehler was on his way to prison for resisting the draft. Kehler said he was ‘very excited’ to be going to prison, treating it not as a cost, but as a way of standing in solidarity with his friends and comrades. He didn’t think there was much expected benefit either: as Ellsberg wrote, “Randy Kehler never thought his going to prison would end the war.” It was simply the right thing to do.

So we might take the moral of Ellsberg’s story to be that decision theory, with its numbers and calculations, can only take us so far. I myself think that the scale and probability of consequences matter a lot. I think Ellsberg’s decision was justified by the possibility that it would shorten the war and by the number of lives at stake, and that morality does not demand that one martyrs oneself when there is no chance of success. But I am also happy that there are Randy Kehlers in the world: people who will stand up against injustice no matter the cost. If they inspire more of us to act with the bravery and selflessness of Daniel Ellsberg, those costs will likely be repaid.

By Nikhil Venkatesh

Dr Nikhil Venkatesh is an LSE Fellow in the Department of Philosophy, Logic and Scientific Method, specialising in moral and political philosophy.

Special mentions

In memory of Daniel Ellsberg. Thanks to Richard Bradley and Tena Thau for comments on a draft version of this post.

Further reading

Richard Bradley (2017). Decision Theory With a Human Face. Cambridge University Press. https://doi.org/10.1017/9780511760105

Lara Buchak (2022). Normative Theories of Rational Choice: Rivals to Expected Utility Theory. The Stanford Encyclopedia of Philosophy (Summer 2022 edition), Edward N. Zalta (ed.). https://plato.stanford.edu/entries/rationality-normative-nonutility/

Daniel Ellsberg (1961). Risk, Ambiguity, and the Savage Axioms. The Quarterly Journal of Economics, 75 (4), pp. 643-699. https://doi.org/10.2307/1884324

David Smith (2021). ‘I never regretted doing it’: Daniel Ellsberg on 50 years since leaking the Pentagon Papers. The Guardian. https://www.theguardian.com/world/2021/jun/13/daniel-ellsberg-interview-pentagon-papers-50-years

Very interesting article. I agree with EUT in principle, but in practice I often find it impractical. It’s useful when options are clearly defined and easy to analyze. But life’s hard problems rarely offer a well-defined set of options to choose from, and even if they did, those options often require the ability to reliably forecast far into the future, which most of us are not that good at. So I’m glad that you considered a real-life scenario in this article; it’s really helpful.

Thanks for the comment – that’s absolutely right, there are also epistemic difficulties with applying EUT. But you might think that we need some decision theory, even when the options and forecasts are very unclear, and a theory that works in simpler cases might help us in those more realistic ones.